Publications

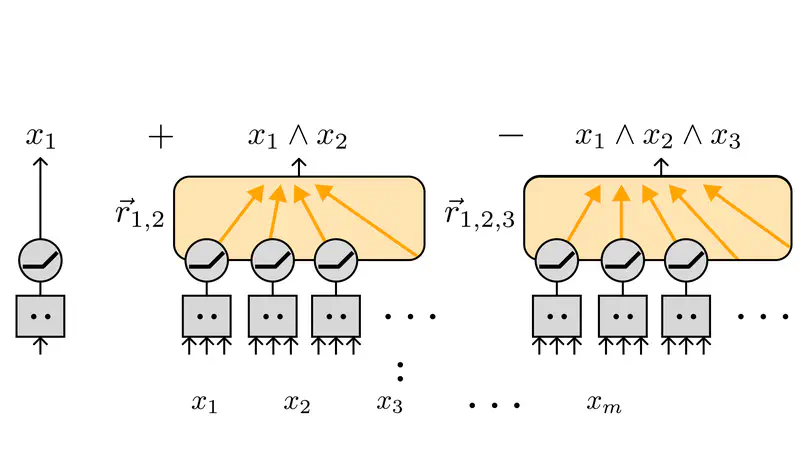

We formally study what sparse circuits neural networks can represent and compute in superposition – that is, using sparse features represented near-orthogonally in a high-dimensional space. We show that not only can ReLU MLPs perform more computation at a fixed width by placing features in superposition, but also that they can maintain superposition through multiple layers.

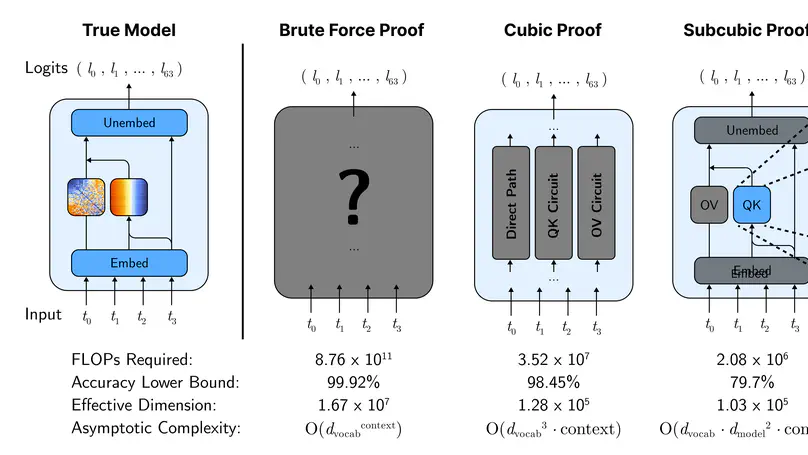

We use mechanistic interpretability to generate compact formal guarantees on model performance on a toy Max-of-K task. We find that shorter proofs seem to require more mechanistic understanding, and that more faithful mechanistic understanding leads to tighter performance bounds. We identify compounding structureless noise as a key challenge for the proofs approach.

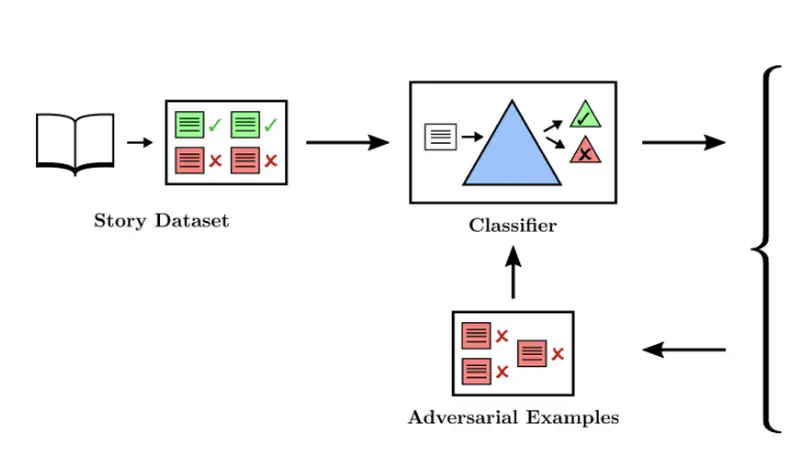

We study the limits of adversarial training on an injury classification task using a variety of attacks, including a new saliency map–based snippet rewrite tool to assist our human adversaries. While adversarial training was not able to eliminate all in-distribution failures, it did increase robustness to the adversarial attacks that we trained on.