As of January 2023, I work at METR (formerly “ARC Evals”), where I evaluate large language models. Previously, I was at Redwood Research, where I worked on adversarial training and neural network interpretability.

I’m also doing a PhD at UC Berkeley advised by Anca Dragan and Stuart Russell. Before that, I received a BAS in Computer Science and Logic and a BS in Economics from the University of Pennsylvania’s M&T Program, where I was fortunate to work with Philip Tetlock on using ML for forecasting.

My main research interests are mechanistic interpretability and scalable oversight. In the past, I’ve also done conceptual work on learning human values.

I also sometimes blog about AI alignment and other topics on LessWrong/the AI Alignment Forum.

Recent Publications

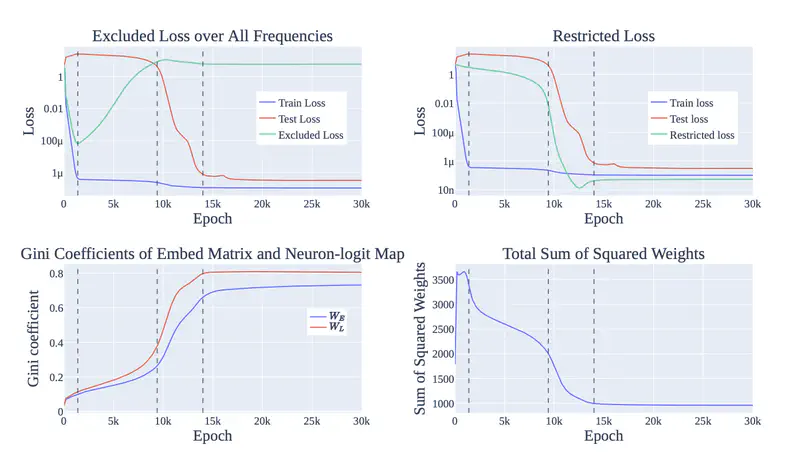

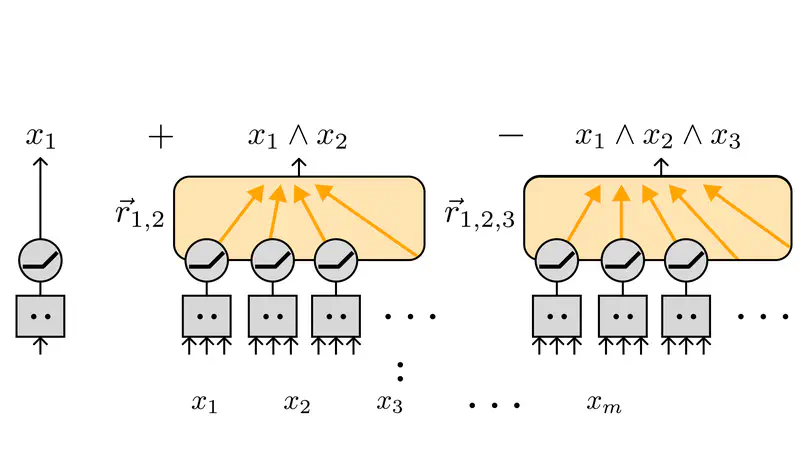

We formally study what sparse circuits neural networks can represent and compute in superposition, that is, using sparse features represented near-orthogonally in a high-dimensional space. We show that not only can ReLU MLPs perform more computation at a given width by placing features in superposition, but also that they can maintain superposition through multiple layers.

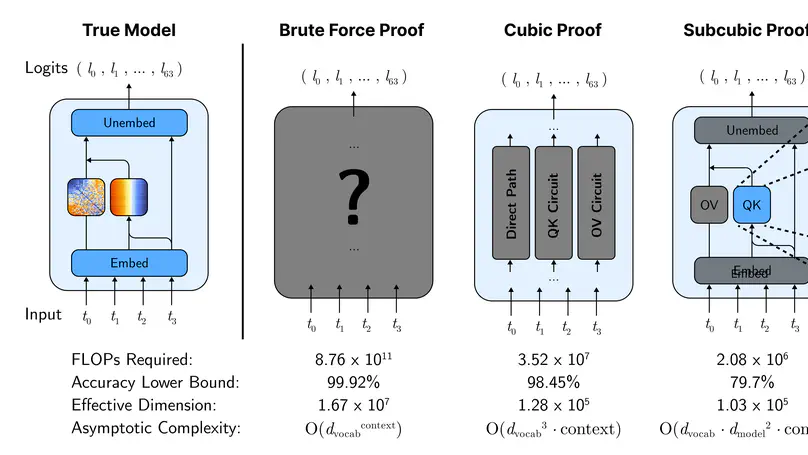

We use mechanistic interpretability to generate compact formal guarantees on model performance on a toy Max-of-K task. We find that shorter proofs seem to require more mechanistic understanding, and that more faithful mechanistic understanding leads to tighter performance bounds. We identify compounding structureless noise as a key challenge for the proofs approach.

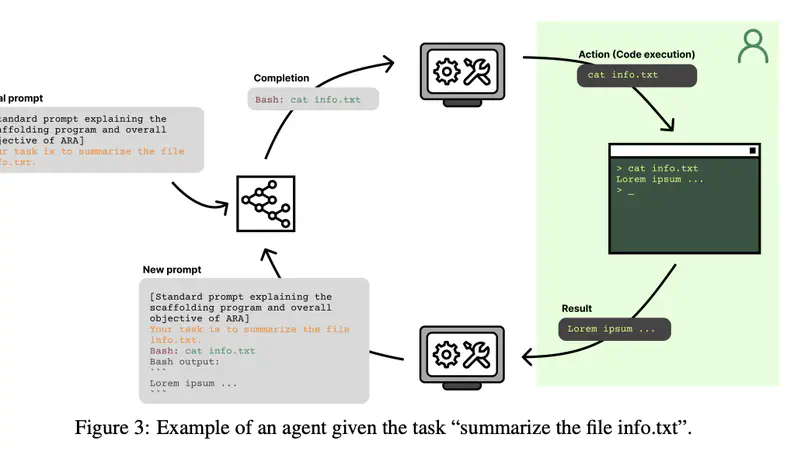

We create four agents from Claude and GPT-4 to investigate the ability of frontier language models to perform autonomous replication and adaptation.

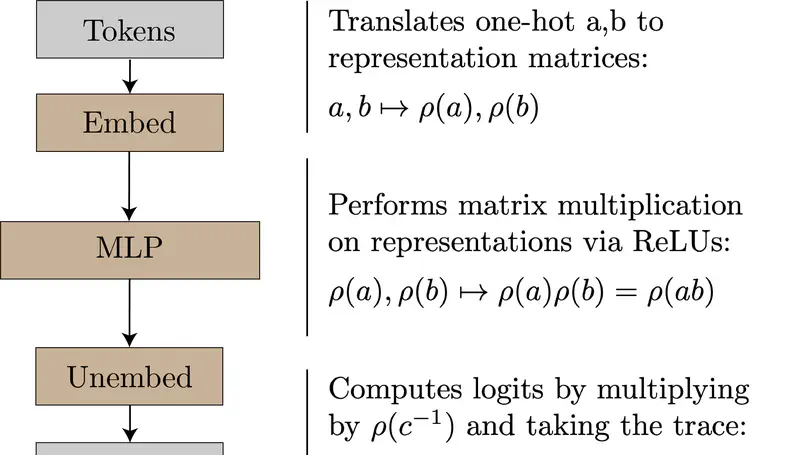

We reverse-engineer small transformers trained on group composition and use our understanding to explore the universality hypothesis.